In the 2011 Jeopardy! face-off between IBM’s Watson and Jeopardy! champions Ken Jennings and Brad Rutter, Jennings acknowledged his brutal takedown by Watson by writing alongside his Final Jeopardy answer, “I for one welcome our new computer overlords.” This display of computer “intelligence” sparked a great deal of conversation among all sorts of people, many of whom became concerned at what they perceived as Watson’s ability to think like a human. But, as BigR.io’s Director of Business Development Andy Horvitz points out in his blog “Watson’s Reckoning,” even the Artificial Intelligence technology with which Watson was produced is now obsolete.

The thing is, while Watson was once considered to be the cutting-edge technology of Artificial Intelligence, Artificial Intelligence itself isn’t even cutting-edge anymore. Now, before you start lecturing me about how AI is cutting-edge, let me explain.

Defining Artificial Intelligence

You see, as Bernard Marr points out, Artificial Intelligence is the overarching term for machines having the ability to carry out human tasks. In this regard, modern AI as we know it has already been around for decades, since the 1950s at least (especially thanks to the influence of Alan Turing). Moreover, some form of the concept of artificial intelligence dates back to ancient Greece, when philosophers started describing human thought processes as a symbolic system. It’s not a new concept, and it’s a goal that scientists have been working towards for as long as there have been machines.

The problem is that “artificial intelligence” has become a colloquial term applied whenever a machine mimics “cognitive” functions that humans associate with other human minds, such as “learning” and “problem solving.” But the thing is, AI isn’t necessarily synonymous with “machines capable of human thought.” Any machine that can complete a task in a way similar to how a human might can be considered AI. And in that regard, AI really isn’t cutting-edge.

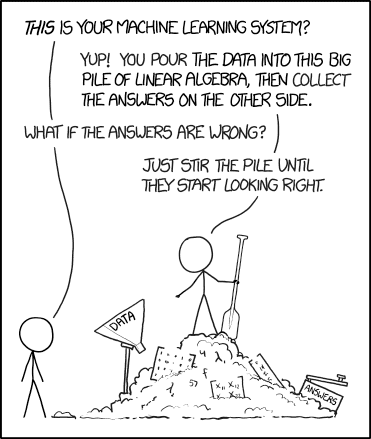

What is cutting-edge are the modern approaches to Machine Learning (like Deep Learning, but that’s for another blog), which now sit at the forefront of “human-like” AI technology.

Though many people (scientists and common folk alike) use the terms AI and Machine Learning interchangeably, Machine Learning actually has the narrower focus of using the core ideas of AI to help solve real-world problems. For example, while Watson can perform the seemingly human task of critically processing and answering questions (AI), it lacks the ability to apply those answers pragmatically to real-world problems, like synthesizing queried information to find a cure for cancer (Machine Learning).

Additionally, as I’m sure you already know, Machine Learning is based upon the premise that machines train themselves with data rather than being explicitly programmed, which is not necessarily a requirement of Artificial Intelligence overall.
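
To make that last distinction a little more concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the toy task (flagging a message as spam by counting its exclamation marks), the function names, and the training data are all assumptions, not anything Watson or a real spam filter does. The first function is a hand-programmed rule, which can still count as AI in the broad sense. The second picks its own cutoff from labeled examples, which is the Machine Learning premise in miniature.

```python
# Hypothetical toy task: flag a message as spam based on how many
# exclamation marks it contains. All names and data here are illustrative.

def rule_based_is_spam(exclamations: int) -> bool:
    """Hand-programmed rule: a person decided the cutoff is 3."""
    return exclamations >= 3


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Choose the cutoff that classifies the most labeled examples correctly."""
    candidates = sorted({count for count, _ in examples})
    best_cutoff, best_correct = candidates[0], -1
    for cutoff in candidates:
        # Count how many examples the rule "count >= cutoff" gets right.
        correct = sum((count >= cutoff) == label for count, label in examples)
        if correct > best_correct:
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff


# Made-up labeled data: (number of exclamation marks, is it spam?)
training_data = [
    (0, False), (1, False), (2, False), (4, False),
    (5, True), (6, True), (8, True),
]

learned_cutoff = learn_threshold(training_data)


def learned_is_spam(exclamations: int) -> bool:
    """Same kind of rule, but the cutoff came from the data, not a person."""
    return exclamations >= learned_cutoff


print("learned cutoff:", learned_cutoff)
print("rule-based says 4 is spam:", rule_based_is_spam(4))
print("learned model says 4 is spam:", learned_is_spam(4))
```

Both functions carry out a "human" task, but only the second one changes its behavior when you hand it different data, with no one rewriting the rule. Real Machine Learning systems are vastly more sophisticated than this, of course, but the division of labor is the same.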

Why Know the Difference?

So why is it important to know the distinction between Artificial Intelligence and Machine Learning? Well, in many ways, it’s not as important now as it might be in the future. Since the two terms are so often used interchangeably and Machine Learning is seen as the technology driving AI, hardly anyone would correct you if you were to use them incorrectly. But, as technology is progressing ever faster, it’s good practice to know some distinction between these terms for your personal and professional gain.

Artificial Intelligence, while a hot topic, is not yet widespread – but it might be someday. For now, when you want to inquire about AI for your business (or personal use), you probably mean Machine Learning instead. By the way, did you know we can help you with that? Find out more here.